Facial Expression DA

A generative approach for Facial Expression Data Augmentation

Image-to-Image translation aims to transfer images from a source domain to a target one while preserving the content representations. It can be applied to a wide range of applications, such as style transfer, season transfer, and photo enhancement.

To accomplish this task several architectures have been proposed, including CycleGANs

In the context of Deep Learning, one major problem is the need for huge datasets to effectively train a deep model. However, the number of available images can be limited and can vary across classes, leading to unbalanced datasets. To address this problem, many data augmentation techniques have been developed in recent years.

I presented this project with Dr. Francesco Azzoni and Dr. Corrado Fasana for the Advanced Deep Learning Models and Methods exam at Politecnico di Milano.

The work exploits the advancements in Image-to-Image translation to perform data augmentation of facial expression data.

The effectiveness of the proposed method is assessed by analysing the classification performance on the unbalanced FER2013 facial expression dataset.

Before exploiting ECycleGANs to perform data augmentation, an attempt was made to reproduce the results obtained by Zhu et al.

The original FER2013 dataset

FER2013 dataset

- Gaussian likelihood: the idea is to perform instance selection by removing the instances that lie in low-density regions of the data manifold. This is done with the method proposed in DeVries et al., which computes the likelihood of each image using a Gaussian model fit on feature embeddings produced by a pre-trained Inceptionv3 classifier. Then, only the samples with a likelihood greater than a certain threshold are kept.

- Confidence filtering: first, a classifier is trained on the whole dataset. All the samples are then evaluated with the classifier and ranked according to the probability of belonging to their annotated class. Using either a minimum confidence or a number of samples as the threshold, it is possible to obtain a filtered version of the dataset in which most of the ambiguous samples are discarded.
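The two filtering strategies can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the project's code: the Inceptionv3 embeddings and the classifier probabilities are assumed to be precomputed, and the function names and the `keep_fraction` parameter (a quantile used as the likelihood threshold) are hypothetical.

```python
import numpy as np


def gaussian_log_likelihood(embeddings: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Log-likelihood of each row under a single Gaussian fit to all rows."""
    n, d = embeddings.shape
    mean = embeddings.mean(axis=0)
    centered = embeddings - mean
    # Regularise the covariance so it stays invertible for small datasets.
    cov = centered.T @ centered / max(n - 1, 1) + eps * np.eye(d)
    _, logdet = np.linalg.slogdet(cov)
    # Squared Mahalanobis distance of every sample from the mean.
    maha = np.einsum("ij,ij->i", centered @ np.linalg.inv(cov), centered)
    return -0.5 * (maha + logdet + d * np.log(2.0 * np.pi))


def filter_by_likelihood(embeddings: np.ndarray, keep_fraction: float = 0.8) -> np.ndarray:
    """Indices of the samples whose likelihood is above the chosen quantile."""
    scores = gaussian_log_likelihood(embeddings)
    # Assumption: the threshold is expressed as a fraction of samples to keep.
    threshold = np.quantile(scores, 1.0 - keep_fraction)
    return np.flatnonzero(scores >= threshold)


def filter_by_confidence(probs: np.ndarray, labels: np.ndarray,
                         min_confidence: float = 0.5) -> np.ndarray:
    """Indices of the samples whose annotated class gets enough probability."""
    annotated = probs[np.arange(len(labels)), labels]
    return np.flatnonzero(annotated >= min_confidence)
```

Both functions return indices into the dataset, so either filter can be applied without copying the images. The threshold values shown are placeholders, not the ones used in the experiments.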

However, even though both these approaches seemed to correctly remove ambiguous samples, the performance reported in the paper

Once the dataset has been filtered, the next step is the implementation and training of the ECycleGAN model. Starting from the existing implementation of CycleGAN provided by

- Instead of the original residual block of the CycleGAN generator, a deeper and more complex one, called RDNB, is employed.

- The perceptual loss is implemented in place of the original pixel-wise loss.

- The Wasserstein GAN objective with gradient penalty (WGAN-GP) is implemented as an alternative to the LSGAN objective function to stabilize the training. Both have been tested and compared in the experiments section.
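For reference, the two adversarial objectives in their standard textbook form are given below. The notation is chosen here for illustration: \(G\) maps a source image \(x\) to the target domain, \(D\) is the target-domain discriminator, \(y\) is a real target image, and \(\lambda_{gp}\) is the gradient penalty weight. The exact variants used in the project may differ.

\[
\mathcal{L}_{D}^{\text{LSGAN}} = \tfrac{1}{2}\,\mathbb{E}_{y}\big[(D(y) - 1)^2\big] + \tfrac{1}{2}\,\mathbb{E}_{x}\big[D(G(x))^2\big],
\qquad
\mathcal{L}_{G}^{\text{LSGAN}} = \tfrac{1}{2}\,\mathbb{E}_{x}\big[(D(G(x)) - 1)^2\big]
\]

\[
\mathcal{L}_{D}^{\text{WGAN-GP}} = \mathbb{E}_{x}\big[D(G(x))\big] - \mathbb{E}_{y}\big[D(y)\big] + \lambda_{gp}\,\mathbb{E}_{\hat{y}}\big[(\lVert \nabla_{\hat{y}} D(\hat{y}) \rVert_2 - 1)^2\big],
\qquad
\mathcal{L}_{G}^{\text{WGAN-GP}} = -\,\mathbb{E}_{x}\big[D(G(x))\big]
\]

Here \(\hat{y} = \epsilon\, y + (1 - \epsilon)\, G(x)\) with \(\epsilon \sim U[0, 1]\), so the penalty is evaluated on random interpolations between real and generated images.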

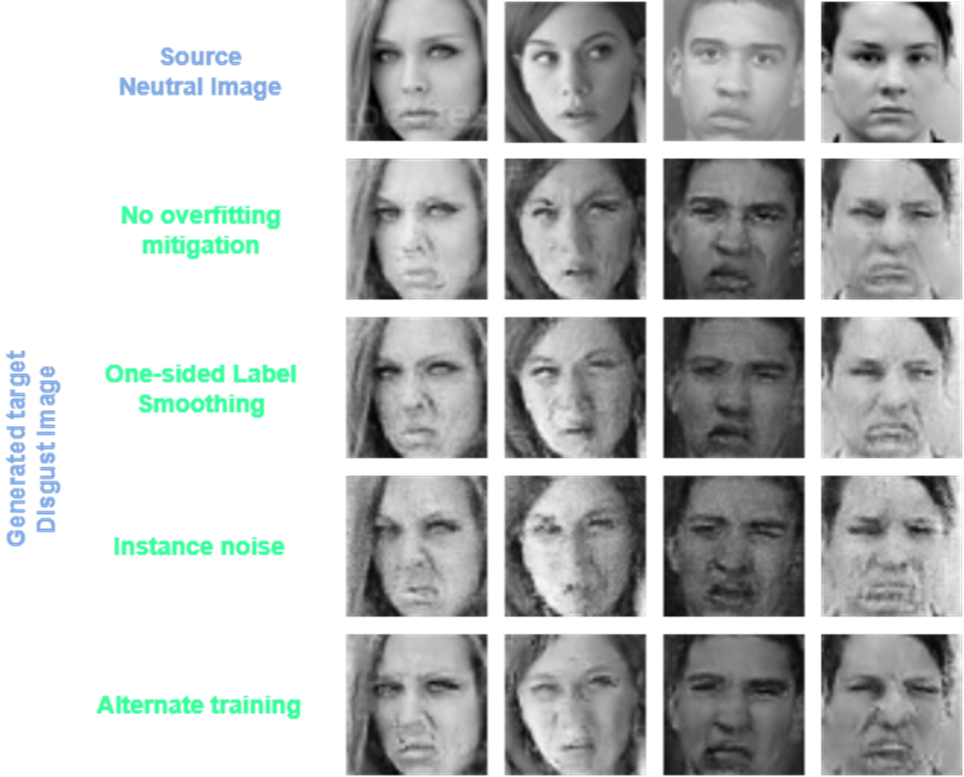

Given the fact that the number of samples of some classes is very limited, the discriminator of such classes tends to overfit. Several GAN-specific techniques have been proposed to mitigate this problem:

- One-sided label smoothing: this technique adds label noise. More precisely, the discriminator is trained on randomly flipped labels instead of the real ones. This label noise is applied only to the samples of the less represented class when they are fed to the discriminator.

- Instance noise: in this case, the discriminator sees the correct labels, but its input sample is noisy. This avoids the saturation of the discriminator objective, reducing overfitting. The added noise is Gaussian with zero mean and a standard deviation that decays during training.

- Alternate training: once this procedure is activated (after a fixed number of epochs), the discriminator of the less represented class is not trained at every iteration. Since the number of samples is small, the discriminator is prone to overfitting and can thus discriminate between real and fake samples with very low uncertainty. Hence, training it less frequently than the generator gives the latter more time to learn.
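The three techniques above reduce to small helpers around the discriminator update. The sketch below is framework-agnostic NumPy, written for illustration only: the function names are hypothetical, the linear decay schedule is one possible choice, and labels are assumed to be binary (1 for real, 0 for fake).

```python
import numpy as np


def flip_labels(labels: np.ndarray, flip_prob: float,
                rng: np.random.Generator) -> np.ndarray:
    """Flip each binary real/fake label (1/0) with probability flip_prob."""
    flip = rng.random(labels.shape) < flip_prob
    return np.where(flip, 1 - labels, labels)


def instance_noise_std(step: int, total_steps: int, initial_std: float) -> float:
    """Standard deviation decaying linearly from initial_std to zero."""
    remaining = max(0.0, 1.0 - step / total_steps)
    return initial_std * remaining


def add_instance_noise(images: np.ndarray, std: float,
                       rng: np.random.Generator) -> np.ndarray:
    """Add zero-mean Gaussian noise to the discriminator input."""
    return images + rng.normal(0.0, std, size=images.shape)


def train_discriminator_now(step: int, generator_steps_per_d_step: int) -> bool:
    """True on the iterations where the discriminator is also updated."""
    return step % generator_steps_per_d_step == 0
```

In a training loop, the generator would be updated at every step, while the discriminator of the less represented class would be updated only when `train_discriminator_now` returns `True`, on inputs passed through `add_instance_noise` and targets passed through `flip_labels`.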

After implementing the proposed approaches, several experiments with different parameters were conducted to compare the quality of the synthetic data generated by CycleGAN and, especially, ECycleGAN. The final performance of the classifier on the original and augmented datasets is assessed using 3x10-fold cross-validation and averaging the results.
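As an illustration of the evaluation protocol, the sketch below generates the splits for a repeated k-fold scheme, assuming that "3x10-fold" means 10-fold cross-validation repeated 3 times with different shuffles. The helper names and the `evaluate` callback are hypothetical. The splits here are not stratified, which a real run on an unbalanced dataset would probably want.

```python
import numpy as np


def repeated_kfold_indices(n_samples: int, n_splits: int = 10,
                           n_repeats: int = 3, seed: int = 0):
    """Yield (train_idx, test_idx) pairs for n_repeats shuffled k-fold runs."""
    rng = np.random.default_rng(seed)
    for _ in range(n_repeats):
        permutation = rng.permutation(n_samples)
        folds = np.array_split(permutation, n_splits)
        for i, test_idx in enumerate(folds):
            # Every fold except the i-th one is used for training.
            train_idx = np.concatenate(folds[:i] + folds[i + 1:])
            yield train_idx, test_idx


def cross_validate(evaluate, n_samples: int, **kwargs) -> float:
    """Average a user-supplied evaluate(train_idx, test_idx) over all splits."""
    scores = [evaluate(train, test)
              for train, test in repeated_kfold_indices(n_samples, **kwargs)]
    return float(np.mean(scores))
```

With the default arguments, `evaluate` is called 30 times and the returned value is the mean of the 30 scores.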

For the first experiments, the employed CycleGAN architecture and parameters are the same as

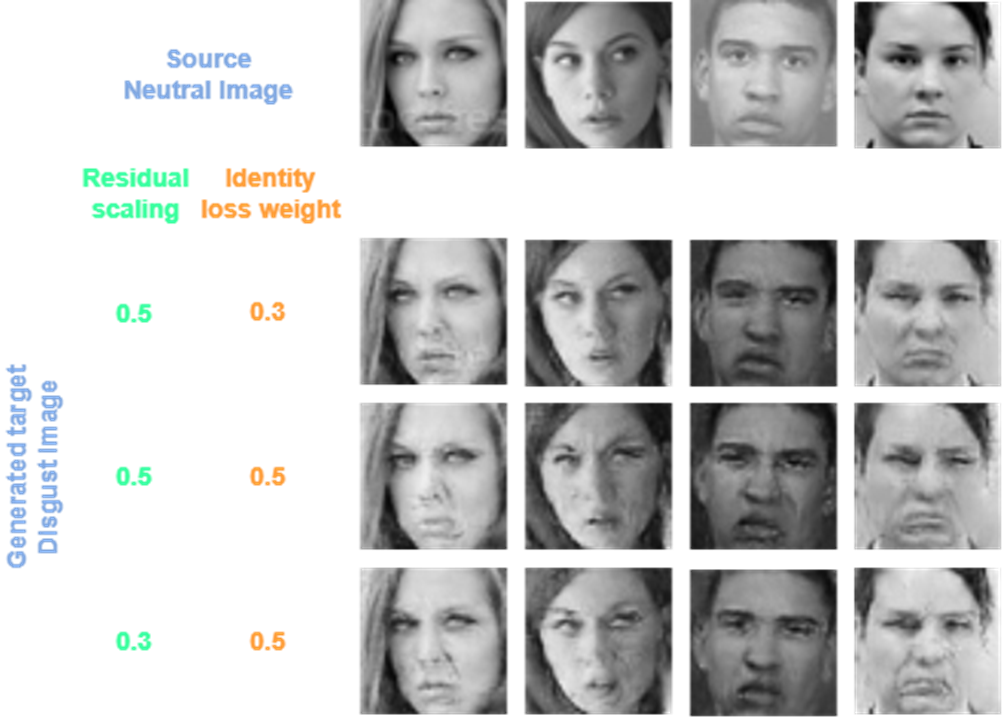

The paper does not consider the identity loss, so different experiments were performed weighting the identity loss in different ways (\(\lambda_{idt} \in \{0.0, 0.3, 0.5\}\)). For each configuration, after training, 100 Disgust images are generated starting from a fixed set of Neutral images and added to the original dataset to assess the impact on the classifier performance. Initially, the cycle consistency loss weight was set to \(\lambda_{cyc} = 10\) as in the paper
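To make the role of the two weights explicit, the standard CycleGAN objective can be written as follows. The notation is assumed here: \(G\) and \(F\) are the two generators (for example Neutral to Disgust and back), and \(D_X\), \(D_Y\) are the two discriminators. Some implementations scale the identity term by \(\lambda_{cyc}\,\lambda_{idt}\) instead. Which convention was used in these experiments is not stated, so the form below is only a sketch.

\[
\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{GAN}(G, D_Y) + \mathcal{L}_{GAN}(F, D_X) + \lambda_{cyc}\,\mathcal{L}_{cyc}(G, F) + \lambda_{idt}\,\mathcal{L}_{idt}(G, F)
\]

\[
\mathcal{L}_{cyc}(G, F) = \mathbb{E}_{x}\big[\lVert F(G(x)) - x \rVert_1\big] + \mathbb{E}_{y}\big[\lVert G(F(y)) - y \rVert_1\big],
\qquad
\mathcal{L}_{idt}(G, F) = \mathbb{E}_{y}\big[\lVert G(y) - y \rVert_1\big] + \mathbb{E}_{x}\big[\lVert F(x) - x \rVert_1\big]
\]

Setting \(\lambda_{idt} = 0\) removes the identity term entirely, while larger values push each generator to leave images of its own target domain unchanged.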

The experiments of ECycleGAN started replicating the same base configuration reported in Zhang et al.

Unfortunately, due to the limited resources available, neither training such a powerful network nor performing a complete hyper-parameter tuning was feasible. Thus, the number of dense blocks and sub-blocks was decreased to 2 and 3, respectively. Given the poor results and the divergence problems that arose using the proposed WGAN-GP, the following experiments were performed using LSGAN

Given that the discriminator of the less represented class tends to overfit in the previous models, the model providing the most promising results was selected and enhanced with the techniques described previously to try to mitigate this problem. More specifically, label smoothing was applied with a label flip probability of \(1\%\). Instance noise was implemented using Gaussian noise with an initial standard deviation of \(0.1\) or \(0.05\) that then decays linearly, while for alternate training the generator-to-discriminator training ratio was set to \(10:1\) or \(5:1\). As done for CycleGAN, for each trained model, 100 Disgust images are generated starting from the same fixed set of Neutral images, for better comparison, and added to the original dataset to assess the impact on the classifier performance. Then, the most promising model is also used to generate 200 and 500 images to check whether a larger augmentation can further boost the classifier performance. Finally, a few experiments were performed to generate Surprise images from Neutral ones, to check the impact of the imbalance gap.

The obtained results were evaluated both qualitatively and quantitatively. In particular, a qualitative evaluation of the images generated by each experiment was performed first. Then, a quantitative evaluation of the multi-class classification was carried out using the following metrics:

- Mean Precision.

- Per-Class Precision.

- Mean Receiver Operating Characteristic - Area Under the Curve (ROC-AUC).

- Per-Class ROC-AUC.

The idea is that the Precision can provide a first very intuitive indication of the classifier’s capabilities, while the AUC tells how much the model is capable of distinguishing between classes (in a one-vs-all setting).
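The metrics listed above can be computed with a few lines of NumPy. The sketch below is illustrative rather than the evaluation code of the project: the function names are hypothetical, class labels are assumed to be integers from 0 to `n_classes - 1`, and the AUC uses the pairwise-ranking definition, which is equivalent to the area under the ROC curve.

```python
import numpy as np


def per_class_precision(y_true: np.ndarray, y_pred: np.ndarray,
                        n_classes: int) -> np.ndarray:
    """Precision TP / (TP + FP) per class; 0 when a class is never predicted."""
    precisions = np.zeros(n_classes)
    for c in range(n_classes):
        predicted_c = y_pred == c
        if predicted_c.any():
            precisions[c] = np.mean(y_true[predicted_c] == c)
    return precisions


def binary_auc(scores: np.ndarray, is_positive: np.ndarray) -> float:
    """Probability that a random positive outranks a random negative.

    Ties between a positive and a negative score count as 0.5.
    """
    pos, neg = scores[is_positive], scores[~is_positive]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def per_class_auc(y_true: np.ndarray, probs: np.ndarray) -> np.ndarray:
    """One-vs-all ROC-AUC per class from an (n_samples, n_classes) score matrix."""
    return np.array([binary_auc(probs[:, c], y_true == c)
                     for c in range(probs.shape[1])])
```

The mean precision and mean ROC-AUC are then the averages of the per-class arrays, for example `per_class_precision(y_true, y_pred, 7).mean()` for the seven FER2013 classes.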

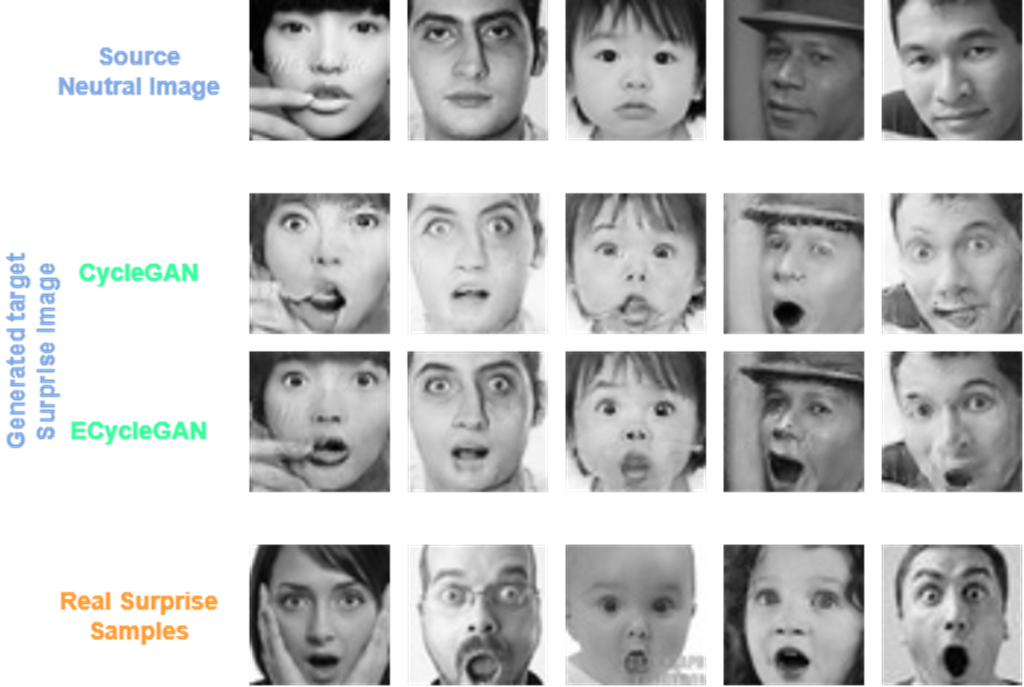

The main improvement from CycleGAN to ECycleGAN is related to the quality of the generated images.

Also, the ratio between the number of images of the two domains, and the consequent loss behavior, have a huge impact on the quality of the generated samples. The smaller the gap (e.g., Neutral-Surprise translation), the better the quality.

The techniques previously proposed to tackle overfitting were adopted to force the loss behavior of the Neutral-Disgust experiment, in which the sample gap is far larger, to be similar to that of the Neutral-Surprise one, with the aim of improving the quality of the results. While the loss behavior did change as desired, the quality of the images does not show a convincing improvement.

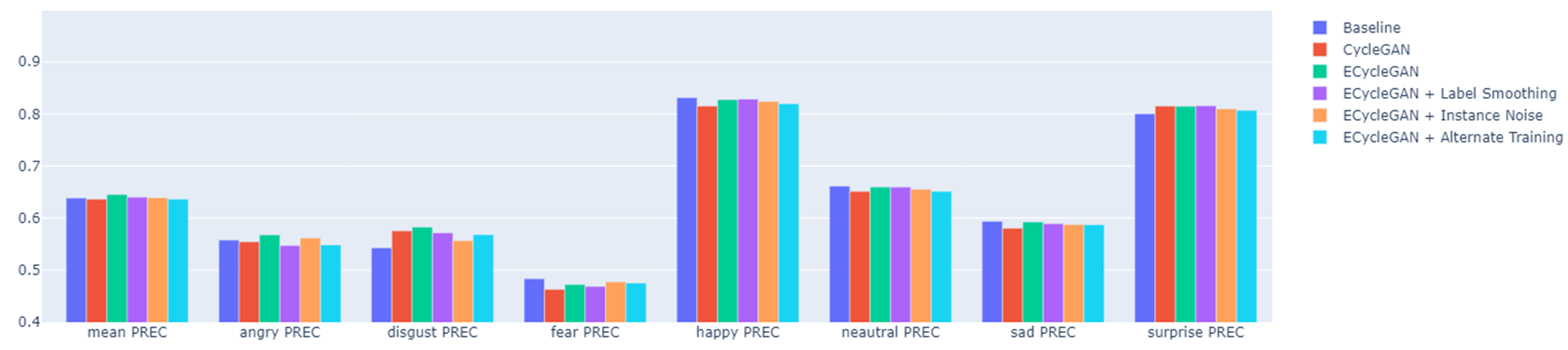

The same classification setup is used for all the experiments, taking into consideration previously cited metrics for evaluation. The classification performance on the non-augmented filtered dataset is used as the baseline.

Among the CycleGAN experiments, there is a noticeable improvement in the Disgust precision w.r.t. the baseline, but at the same time the precision of the other classes decreases, resulting in a mean precision that is similar to the baseline one. This is because only the samples that are clearly disgusted are classified as such, reducing the false positives of the Disgust class. The other, less certain Disgust samples are assigned to other classes, increasing their false positives and reducing their precision. According to the AUC, the quality of the augmentation depends on the value of \(\lambda_{idt}\) used: the higher the value, the lower the AUC. This suggests that synthetic samples with features similar to real faces (more likely with higher \(\lambda_{idt}\)) are more easily misclassified, since the stronger identity constraint prevents them from looking disgusted. Thus, the CycleGAN architecture is not powerful enough to allow better discrimination between classes, leading to a mean AUC that is similar to that of the baseline.

Regarding ECycleGAN, the first experiments were performed to establish the best values of the residual scaling (\(\alpha\)) and identity loss weight (\(\lambda_{idt}\)) hyper-parameters. The best overall improvement for both metrics is obtained with \(\lambda_{idt} = 0.5\) and \(\alpha=0.5\). Different values of these parameters cause input images to be modified too much or not enough. Using the best model, different techniques to avoid overfitting were employed; however, even though the generator loss convergence improves, no significant performance boost was observed. Increasing the number of augmented images to 200 or 500 decreases the performance due to the introduction of too many bad-quality samples. Finally, considering a more represented class such as Surprise, the qualitative performance of ECycleGAN is surprisingly good, also w.r.t. CycleGAN. However, there is still not much improvement in the classifier performance, since this class is already quite distinguishable from the others and thus the introduction of some lower-quality samples can even slightly decrease the performance w.r.t. the baseline.

Our experiments show that ECycleGAN can be more effective than CycleGAN for Facial Expression Data Augmentation. Although the quality of some generated samples is good, the influence of the badly generated ones is too high to augment the dataset significantly. While introducing a low number of generated samples (around 100) leads to a limited performance boost, introducing more samples (from 200 on) degrades the classification performance, because the bad-quality samples dominate and the intra-class diversity increases too much.

However, the improvement w.r.t. the previously proposed CycleGAN model is present from both qualitative and quantitative viewpoints.

Further experiments and studies could be conducted by adopting the complete ECycleGAN architecture and performing a finer hyper-parameter tuning if resources are available. Moreover, other filtering methods for the input dataset could be explored, as well as an instance selection algorithm to choose the best samples generated by ECycleGANs. Other techniques to avoid overfitting the discriminator could be considered. Finally, pretraining the VGG19 network used for the perceptual loss with a pretext task on human faces could help to extract more suitable features to be considered for the consistency losses, boosting the performance.

You can find the implementation in the linked repository.